eBook

The medical device industry is facing unprecedented challenges due to emerging technologies and increased regulatory scrutiny. Current “waterfall” product development methods are ill-suited to deal with the pace of change and uncertainty that product development organizations are facing. This eBook addresses:

The Need for Speed

“Many companies believe that there is significant pressure to enter a device market early to maximize payoffs due to intense competition.”[1]

Complexity in Medical Devices

“Thirty years ago, the medical device industry essentially made simple tools. Today new innovations are becoming increasingly complex, driven by the advent of new technologies.”[1]

Understanding Barriers to Medical Device Quality, FDA’s Center for Devices and Radiological Health (CDRH)

Driven by the increasing sophistication and complexity of medical devices and the environment in which they operate, an increasing share of device functionality and value depends on software.[2]

A passage from a US Food & Drug Administration report in October 2011 illustrates this increase in complexity:

“… an insulin pump in 2001 could be programmed to deliver varying amounts of insulin throughout the day. Now, a more compact pump communicates via radio frequency to a continuous glucose monitor and suggests insulin dosing using an algorithm.”[3]

Software has become a key differentiating factor in the industry. It is simply faster and easier to develop and deploy software than it is hardware. Companies that embrace this can drive more rapid innovation to take advantage of new opportunities.

The importance of software as a driver of value creation is likely to be magnified by a series of interrelated, mutually amplifying trends:

These trends will affect device and diagnostics firms to varying degrees, but collectively, they will have a significant impact on how medical device and diagnostic firms create and capture the value and how they compete and innovate:

The impact of these trends has begun to make traditional methods of software development obsolete, and put those that hold on to traditional methods at a competitive disadvantage. On the other hand, they also create tremendous opportunities for growth, new products and new lines of business for those who can adopt new methods to take advantage of them.

Medical Devices and Diagnostics have typically had long product development cycles, often 3 to 5 years. Pharma cycles have been even longer. Software development cycles tend to be much shorter in most industries, and have been shrinking over time.

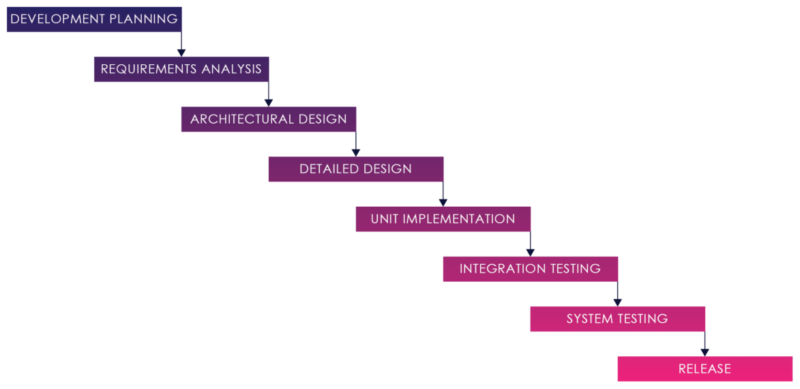

Figure 01: Waterfall Process

The software development strategy for medical devices has traditionally been a “Waterfall” model. The Waterfall development model originates in the manufacturing and construction industries, both highly structured physical environments where after-the-fact changes are prohibitively costly, if not impossible.

In this strategy, each activity, from requirements analysis through systems testing, is performed and completed before the next activity begins.

This process can work when requirements are well understood and well defined, and there are no changes, hidden complexities, undiscovered requirements, or defects.

The reality is that there are very few cases where requirements don’t change throughout the course of product design and development. This is true for the medical space just as much as it is for other industries.

The FDA acknowledges this in its Regulatory Information Guidance:

“Software requirements are typically stated in functional terms and are defined, refined, and updated as a development project progresses.”[5]

For complex systems, it is rare if not impossible to be able to define requirements completely before starting design and development work. Additional requirements are typically discovered during design and development activities.

This is especially true for systems with significant user interface elements. One reason for this is that traditional requirements documents are a poor way of conveying and getting feedback from actual users, customers and other stakeholders. They are often not read, and if read, not fully understood. Instead, requirements often emerge as users interact with prototypes and actual products.

As noted in the previous section, user experience design plays an increasingly important role, and user design expectations have been significantly raised through their experience as consumers. Designing user experiences is difficult, and requires feedback from users to get right.

A waterfall process locks the design into a set of requirements developed long before a solution is realized and a product gets into the hands of users. Limited end-user engagement means an increased risk of implementing a suboptimal solution.

As one senior director at a large medical device firm noted to me:

“In our current process, by the time people see it, it’s done, and it’s difficult to change.”

One of the fallacies of traditional waterfall development is to treat software development as a production activity. In software development we are creating the first instance of a product, not copying a piece of software that has been previously designed, developed and put into production. In software development, development is actually a detailed design activity. The need to revisit requirements and design specifications during development is frequent. As a development manager at a major device firm noted to me:

“Many of the [system’s] details only become known to us as we progress in the [system’s] implementation. Some of the things that we learn invalidate our design and we must backtrack.”

For physical devices, the detailed design process typically involves significant changes as the device is designed and prototyped and feedback is incorporated. Whereas for a physical device, production is a critical, costly, and time-consuming activity, for software, it is negligible.

In a complex system, one bug may hide another. Your codebase grows over time, increasing the chance that any change that does occur will touch more things later in the project. The longer the development process proceeds before testing, the greater the risk of multiple integration conflicts and failures. This makes it exponentially more difficult to find and fix problems and to maintain quality.

To add to this, in complex systems, changes discovered about one part of a system in one stage may well change earlier and later stages for other parts of the system.

Outside changes, whether driven by technology, regulations, competition, or demographic forces, are inevitable and accelerating. Software often has to integrate with hardware (such as sensors or diagnostics) via protocols (like Bluetooth or Zigbee), run on operating systems (like Android, iOS or Windows), or interface with third-party software (like EMRs or LIS). These integrations and dependencies are often moving targets, and often introduce significant changes to requirements, design and development.

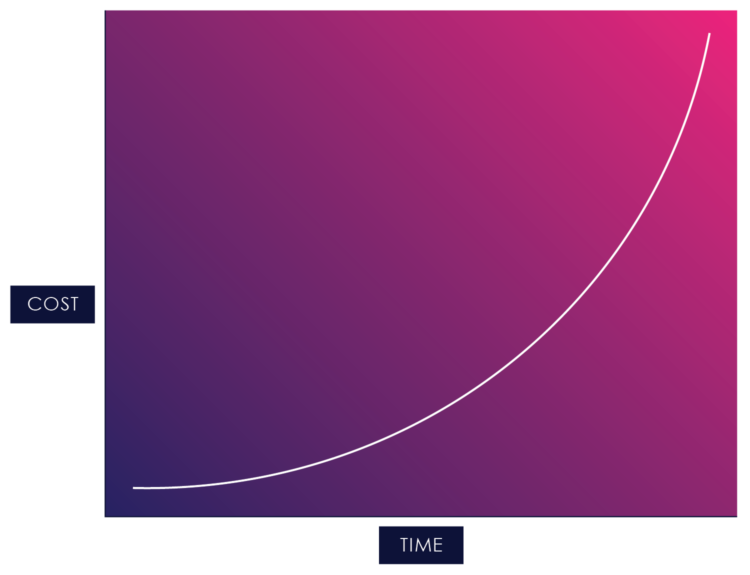

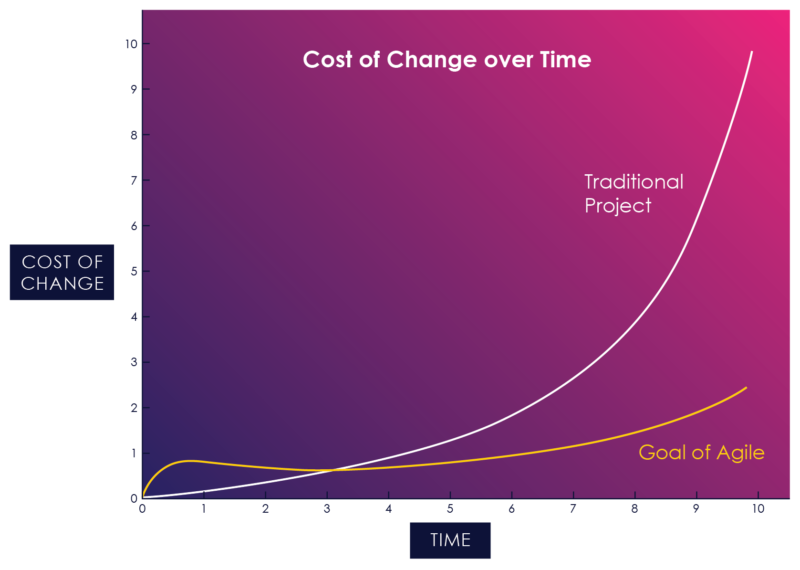

Once you start adding change into the mix, you start running into waterfall development’s cost of change curve:

Figure 02: The Cost of Change in Waterfall

The cost of change curve shows the relative cost of addressing a changed requirement at different stages of the product life cycle. The cost of fixing errors increases exponentially the later they are detected in the development lifecycle because the artifacts within a serial process build on each other, and each previous activity needs to be updated when an error, missing requirement, or suboptimal design is detected.
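The compounding effect described above can be sketched in a few lines of Python. This is a minimal illustration, not a measured model: the stage names follow the waterfall description in this text, and the growth factor of 3 is an assumed value chosen only to show the shape of the curve.

```python
# Illustrative model only. GROWTH_FACTOR is an assumption, not a measured value.
STAGES = ["requirements", "design", "implementation", "system testing", "release"]
GROWTH_FACTOR = 3.0  # assumed: each stage of delay multiplies the cost of a fix


def relative_cost_of_change(introduced_in: str, detected_in: str) -> float:
    """Relative cost of fixing a problem, given where it arose and where it was found."""
    introduced = STAGES.index(introduced_in)
    detected = STAGES.index(detected_in)
    if detected < introduced:
        raise ValueError("a problem cannot be detected before it is introduced")
    # Every stage between introduction and detection has built on the flawed
    # artifact, so the cost compounds once per stage of delay.
    return GROWTH_FACTOR ** (detected - introduced)


if __name__ == "__main__":
    for stage in STAGES:
        cost = relative_cost_of_change("requirements", stage)
        print(f"requirement error found during {stage}: {cost:.0f}x")
```

Under these assumptions, a requirements error caught immediately costs one unit, while the same error caught during system testing costs 27 units, because three later stages have already built on it.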

Missing or incorrect requirements are not the only consequence of the lack of early feedback in waterfall.

Another consequence that has been noted in numerous studies is that features that would prove to be unnecessary or unused with more frequent feedback end up specified, developed, tested and deployed, creating little or no value, and increasing costs and risks.[6]

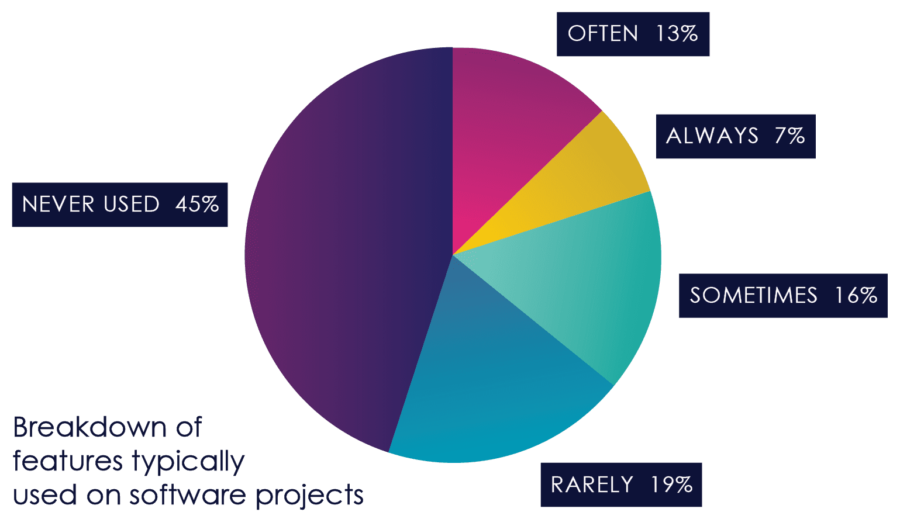

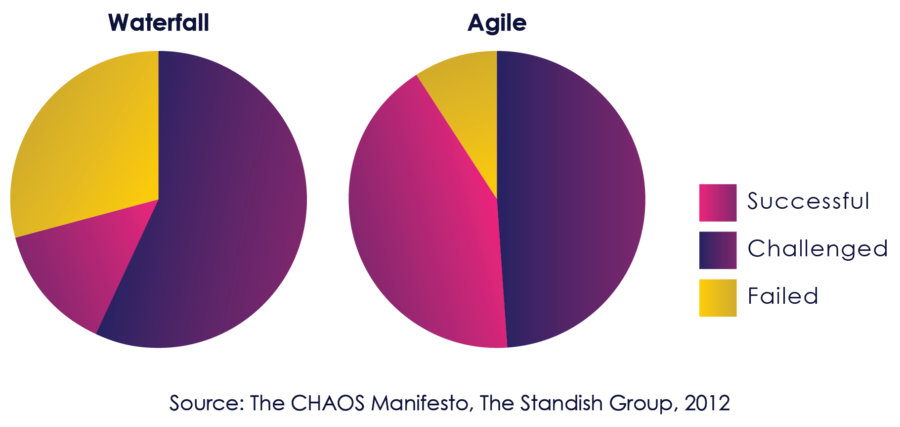

Figure 3, from the Standish Group CHAOS Report, illustrates this dynamic.

Figure 03: Feature Usage

If change is inevitable, and waterfall is ill-suited for change, is there a better way?

The shortcomings of waterfall development are not new and have been generally accepted in the software community for a long time. Agile methodologies emerged as approaches to address these shortcomings in the early 1990s. They were harmonized in 2001 through the Agile Manifesto and were widely adopted in large enterprises across many industries during the mid to late 2000s.

Agile does not attempt to lock down all requirements upfront. It assumes that the requirements and design will evolve and change as more is learned and discovered during the process.

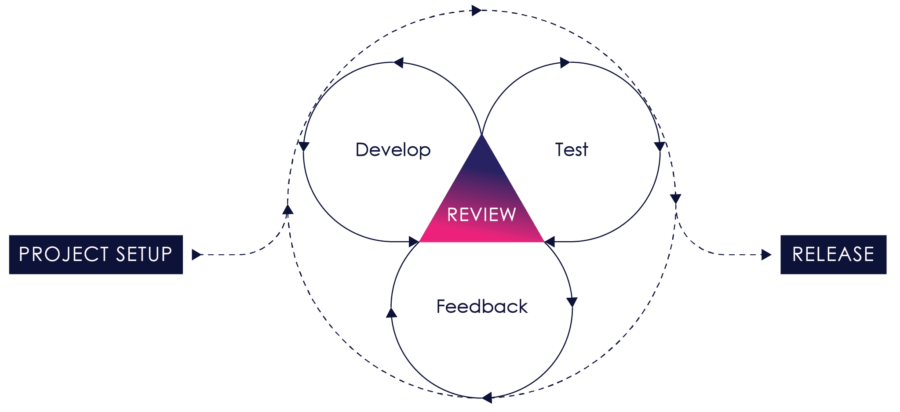

Figure 04: Iterative Process

It works by breaking projects down into user stories – little bits of user functionality that create value for the user – prioritizing them, continuously delivering them in short one to three-week cycles called iterations, and getting feedback on the working software to incorporate back into the development process.

Requirements, design, development, testing, risk management and user feedback are all part of the iterative process. Instead of treating each of these as fixed phases, agile explicitly incorporates feedback so that as you learn, the product and process can evolve and change.

The benefits are easy to see:

Traditionally, change has been shunned on software projects because of its high perceived cost, especially late in the game. Agile, by contrast, contends that the cost of change curve can be relatively flat if you shorten the feedback loop, the time between creating something and validating it.

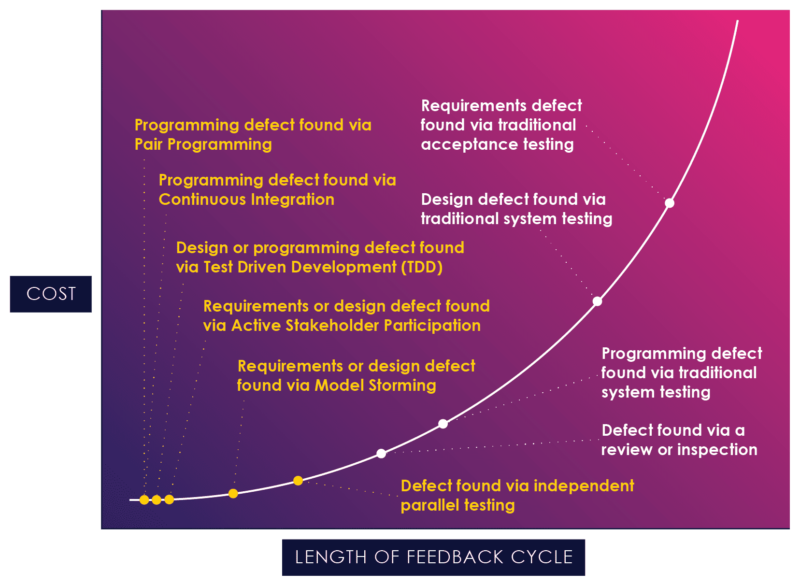

Agile includes a number of practices, such as pair programming, test-driven development and continuous integration, which shorten this feedback loop.

Figure 04: The Application of Agile Techniques

Figure 4, a chart borrowed from Scott Ambler, illustrates how agile practices can reduce the feedback cycle and act earlier in the process, at the flat end of the curve.[7]

If agile practices are consistently applied, the cost of change curve is flattened significantly:

Figure 05: The Cost of Change in Agile vs. Waterfall

When Agile methodology was first introduced, there was understandable resistance to an unproven new approach. As Agile began to be adopted by large organizations across many industries, many studies were done to analyze agile’s effectiveness.

The Standish Group CHAOS report, published annually since 1994, is one of the most respected studies of the state of software projects. It has consistently shown a high correlation between agile and success since enterprise adoption started taking hold in the mid-2000s.

Figure 06: Comparative Success of Agile and Waterfall

By 2012, the Standish Group used their strongest language yet to describe the benefits of Agile:

“The agile process is the universal remedy for software development project failure. Software applications developed through the agile process have three times the success rate of the traditional waterfall method and a much lower percentage of time and cost overruns.”[8]

Other studies, including some in the medical device industry, have shown consistent improvements in quality and project success.[9]

Agile methodology was originally inspired by Lean Manufacturing practices that arose out of the Toyota Production System (TPS), practices that have revolutionized manufacturing. Core principles of lean manufacturing that have parallels in agile include:

Over the last 15 years, agile has been adopted, and has shown better results, in many industries, including many with mission-critical software requiring a high degree of safety and reliability such as aerospace[10], defense[11] and energy[12].

But if agile methods produce better results, why has the medical device and diagnostic industry been slow to adopt agile? Is the methodology just not suitable for medical products?

Objections to agile in the medical device industry have generally centered on three main concerns:

As we shall see, each of these concerns is based on a misconception. The reality is that agile methodology, appropriately applied, improves reliability, safety and effectiveness, is compatible with quality system regulations, and is in fact recognized as a consensus standard by the FDA.

Let’s tackle these issues one at a time.

FDA does not prescribe waterfall. In fact, it recognizes guidance on Agile practices (AAMI TIR45) as a consensus standard.

While many industry professionals believe that FDA regulations require waterfall, neither the FDA’s 21 CFR Part 820 Quality System Regulation nor other regulations derived from it prescribe a particular development methodology. The confusion arises because many of the standards such as IEC 62304 that the FDA does recognize are written in a way that suggests waterfall.[13]

In fact, the FDA explicitly cautions against using waterfall for complex devices:

“The Waterfall model’s usefulness in practice is limited, for more complex devices, a concurrent engineering model is more representative.”[14]

Despite the barriers to agile adoption, a number of firms in the industry, including Orthogonal, have recognized the value of agile, have adapted the methodology to comply with both the letter and the spirit of applicable standards and guidelines, and have used it to develop and launch FDA-cleared and approved products.

In order to provide clarity and guidance on aligning Agile at both the concept and practical level, the AAMI Medical Device Software Committee formed the Agile Software Task Group which produced AAMI TIR45: 2012, Guidance on the use of AGILE practices in the development of medical device software[15]. AAMI TIR45 covers key topics such as documentation, evolutionary design and architecture, traceability, verification and validation, managing changes and “done” criteria.

The US FDA recognized AAMI TIR45 in January of 2013.[16] This served to provide clarity and direction on mapping Agile practices to regulatory requirements, and assurance that the Agile methodology can be aligned and used to develop software for medical devices according to compliance requirements.

Annex B of IEC 62304 provides a useful framework for standards associated with the safety of medical device software. On page 77 it states:

“There is no known method to guarantee 100% safety for any kind of software. There are three major principles which promote safety for medical device software:

Each of these principles must be addressed in adapting Agile to regulated medical product development.

Similar to other aspects of a software development process, not all risks can be known at the beginning of a project, and we discover most hazards as a system evolves. This is acknowledged in ISO 14971, the international standard for risk management systems for medical devices:

“Risk can be introduced throughout the product lifecycle, and risks that become apparent at one point in the life-cycle can be managed by action taken at a completely different point in the life cycle.”[17]

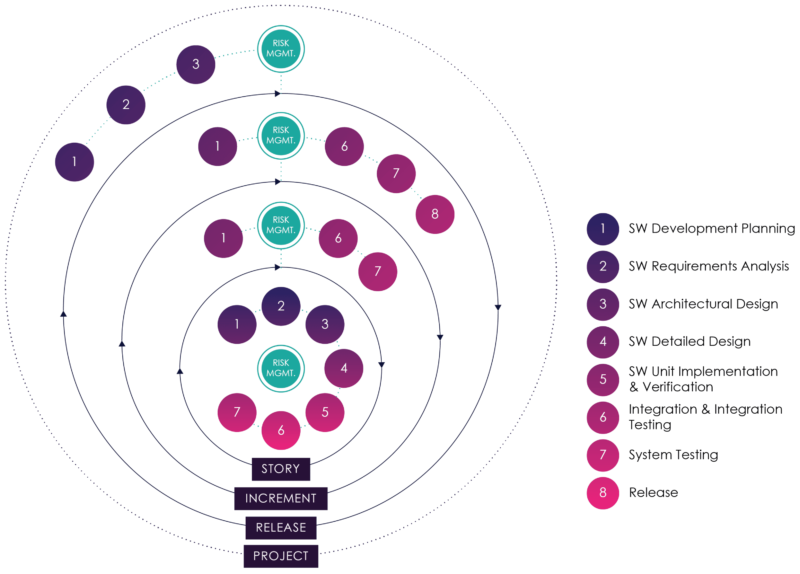

While AAMI TIR45 does not specify how risk management activities should be integrated into agile practices, our experience has been that this is best done by embedding risk management at each level of the lifecycle, with inputs and outputs from each activity within that level.

Hazard analysis, evaluation, and risk control are performed in each sprint/iteration. You maintain a backlog of requirements with associated risk scores for each.

For any story, increment or release, risk assessment becomes part of your definition of done: If new hazards have been introduced or existing hazards have been mitigated, the hazard analysis should be updated. User stories can be used to implement mitigations, and results can be reviewed during iteration demos and release reviews.
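One way to picture risk assessment as part of the definition of done is a small check run before a story is closed. The sketch below is a hypothetical illustration: the `Story` record and its field names are assumptions made for this example and do not come from AAMI TIR45 or any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Story:
    """Hypothetical user story record; field names are illustrative only."""
    title: str
    acceptance_tests_passed: bool
    hazards_changed: bool          # new hazards introduced or existing ones mitigated
    hazard_analysis_updated: bool


def unmet_done_criteria(story: Story) -> list[str]:
    """Return the reasons a story is not yet done (an empty list means done)."""
    reasons = []
    if not story.acceptance_tests_passed:
        reasons.append("acceptance tests have not passed")
    # Risk assessment is part of "done": changed hazards require an updated analysis.
    if story.hazards_changed and not story.hazard_analysis_updated:
        reasons.append("hazard analysis not updated for changed hazards")
    return reasons
```

A story that changes the hazard picture but leaves the hazard analysis untouched would be reported as not done, even if all of its acceptance tests pass.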

By embedding risk management throughout the process, you can discover and eliminate or mitigate more risks, and do so much earlier, during the flat part of the cost of change curve.

This approach is illustrated in Figure 7 (modified from AAMI TIR45: 2012)[18]:

Figure 07: Embedding Risk Management in Agile

In the typical product development lifecycle, release dates are fixed. In waterfall, since requirements and hazards that are discovered late cost more and take more time to implement or mitigate, they either greatly extend the project or are inadequately dealt with and tested.

Hazard response is difficult unless it’s done early. Agile allows fine-grained prioritization of features and reprioritization after every iteration. If you use risk-based prioritization of features and continuous risk assessment, you are much more likely to spend the right effort on the highest risk areas at a much lower cost.
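Risk-based prioritization of a backlog can be sketched as a simple sort. The example below is a minimal illustration under stated assumptions: the 1 to 5 scales, the severity-times-probability score, and the backlog items themselves are all hypothetical, and a real project would use the scoring scheme defined in its own risk management plan.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    """Hypothetical backlog entry; scales and fields are assumptions."""
    title: str
    severity: int        # assumed scale: 1 (negligible) to 5 (catastrophic)
    probability: int     # assumed scale: 1 (improbable) to 5 (frequent)
    business_value: int  # assumed scale: 1 (low) to 5 (high)

    @property
    def risk_score(self) -> int:
        # One common convention; not mandated by any standard cited here.
        return self.severity * self.probability


def prioritize(backlog: list[BacklogItem]) -> list[BacklogItem]:
    """Order items by highest risk first, using business value to break ties."""
    return sorted(backlog, key=lambda item: (-item.risk_score, -item.business_value))


# Illustrative items only.
backlog = [
    BacklogItem("Export report as PDF", severity=1, probability=2, business_value=4),
    BacklogItem("Low-battery alarm", severity=4, probability=3, business_value=3),
    BacklogItem("Dose limit check", severity=5, probability=3, business_value=5),
    BacklogItem("Bluetooth reconnect", severity=3, probability=4, business_value=2),
]

if __name__ == "__main__":
    for item in prioritize(backlog):
        print(f"{item.risk_score:>2}  {item.title}")
```

Because the backlog is re-sorted whenever scores change, re-running `prioritize` after each iteration gives the reprioritization step described above.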

Human Factors in medical software is becoming increasingly important. With greater complexity, more integration and more ways to interface with devices come a “richness of opportunity for use error.”

Many of the risks for complex medical devices are directly related to human factors, including permitted misuses, user complacency, user interface confusion and user interface designs that are not adapted to user workflows.

The FDA’s expectations regarding use error are getting much more rigorous, and greater emphasis is being placed on comparative effectiveness as well as safety. Performing a few formative usability tests and dealing with most usability issues through labeling and training is no longer acceptable; neither is submitting “me too” products that are no better than the ones already in use.

Insufficient emphasis on user experience can also have major business consequences: systems don’t get used if they are hard to use or don’t provide what the users want, when they want it, in a way that is actionable. And systems that don’t get used aren’t effective (and don’t result in future sales).

Human Factors Engineers and User Experience Designers bring expertise, experience, talent and methodologies to a product team that can result in dramatic improvements to project speed, product quality and user satisfaction.

Agile can be adapted to incorporate human factors directly into the development process. User experience designers or human factors engineers create storyboards, task flows and wireframes, get feedback from users, and then create detailed designs with acceptance criteria that make it very clear for developers what they need to develop. They can then get usability feedback from end-users as increments are delivered by the software development team. Hazards related to human factors are surfaced earlier and mitigations can be designed and tested quickly.

Quality is one of the main aims of the agile methodology, and many of the core agile practices are designed to improve software quality while maintaining project velocity.

While traditional software development teams often spend one-third to one-half of their time on defect troubleshooting and rework, agile practices are designed to catch and fix defects early, when they are easiest and cheapest to fix.

Agile practices such as user stories, unit tests, test-driven development, continuous integration and zero bug tolerance are designed to improve and maintain software quality. This allows teams to move much faster with fewer defects than in traditional waterfall.[19]

The agile methodology is supported by a number of practices that enable teams to improve quality, reduce risks and improve project velocity. These practices include:

Taken together, these processes are designed to improve quality and speed by leveraging feedback loops and automation to catch and correct defects early and often.

In its essence, the FDA Quality System Regulation requires you to have a repeatable process, follow it, and demonstrate that the process will produce a quality product.

The FDA requires evidence of design control and requires risk management to be integrated into the design process. Part of this process involves producing work plans, requirements specifications, hazard analysis, design documents, test cases, test plans, and test results as evidence of compliance.

Incorporating agile into a quality management system starts with the software development plan, documenting how you will:

In an agile process, documentation is updated on a per iteration or per increment basis, with some documentation updated automatically through the application of the agile process and development practices such as test-driven development.

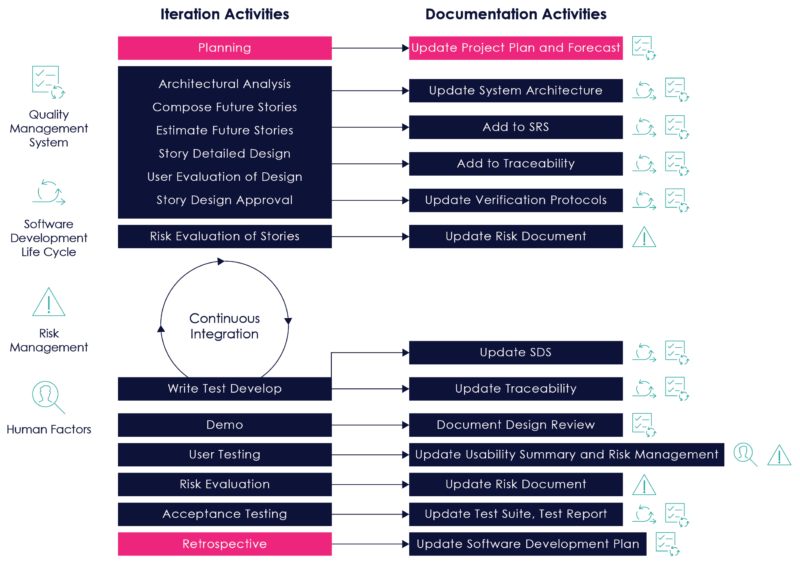

Figure 8 illustrates how documentation can be updated during the course of a development iteration:

Figure 08: Documentation Activities During an Iteration

In Agile, design reviews are a natural part of the process: Iteration reviews, demos, architectural reviews, and retrospectives are an integral part of every development iteration. Design reviews are also performed on an increment and release level. These frequent reviews help catch any issues early, resulting in far fewer surprises and issues at increment and release levels.

Software Verification provides objective evidence that the design outputs of a particular phase of the software development life cycle meet all of the specified requirements for that phase.

Software Validation is confirmation by examination and provision of objective evidence that software specifications conform to user needs and intended uses, and that the particular requirements implemented through software can be consistently fulfilled.[21]

In traditional waterfall, verification and validation activities often happen at the end, after all development is complete, which diverges from the FDA’s recommendations:

“[The FDA] does recommend that software validation and verification activities be conducted throughout the entire software lifecycle.”[22]

One of the biggest advantages of Agile is the integration of testing with development throughout the development process. Testing teams are full participants in requirements, architectural and design reviews, and collaborate with human factors engineers to create acceptance criteria for user stories and implement test cases. By testing stories as soon as they are implemented, testing teams uncover defects and discrepancies early in the process, when they are easiest to fix and have the least impact on other parts of the system. This process also has the benefit of parallelizing independent verification and validation with development, thereby compressing the schedule.

In Agile, verification starts at the user story level, providing traceability from user story to code, code to unit test, and user story to acceptance test. Stories are validated through acceptance tests and product demos. Acceptance tests, with descriptions of preconditions and expected behavior, can be executed by automated testing tools, which can be integrated into a continuous integration server to increase testing coverage. With certain tools, static analysis can also be automated as part of continuous integration. This automation helps teams execute tests more efficiently, generate test results automatically, and ensure the accuracy of tests. It also frees up testing teams to use other techniques like feature testing, exploratory testing, scenario testing and system testing.
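The story-level traceability described here lends itself to an automated check. The following sketch assumes three simple mappings (story to code, code to unit tests, story to acceptance tests); the identifiers and file names are hypothetical, and a real project would pull these links from its own requirements and test management tools.

```python
def find_trace_gaps(story_to_code, code_to_unit_tests, story_to_acceptance_tests):
    """Return (story, problem) pairs wherever a traceability link is missing."""
    gaps = []
    for story, modules in story_to_code.items():
        if not modules:
            gaps.append((story, "no code linked"))
        for module in modules:
            # Code-to-unit-test link
            if not code_to_unit_tests.get(module):
                gaps.append((story, f"{module} has no unit test"))
        # Story-to-acceptance-test link
        if not story_to_acceptance_tests.get(story):
            gaps.append((story, "no acceptance test"))
    return gaps


# Hypothetical project data, for illustration only.
story_to_code = {
    "US-1": ["dose_calc.py"],
    "US-2": ["alarm.py", "battery.py"],
    "US-3": [],
}
code_to_unit_tests = {
    "dose_calc.py": ["test_dose_calc.py"],
    "alarm.py": ["test_alarm.py"],
}
story_to_acceptance_tests = {
    "US-1": ["AT-1"],
    "US-3": ["AT-3"],
}

if __name__ == "__main__":
    for story, problem in find_trace_gaps(
        story_to_code, code_to_unit_tests, story_to_acceptance_tests
    ):
        print(f"{story}: {problem}")
```

Run as a step in continuous integration, a check like this could fail the build whenever a story lacks a link in the chain, keeping the traceability record current without a separate documentation pass.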

At an increment level, verification includes traceability between the product requirements document and the Software Requirements Specification. Validation includes testing of features through feature acceptance tests. These can be automated using automated testing tools, persist in the system-level regression test repository, and should be included in the continuous integration environment once complete.

“Implementing an iterative development method within the organization has helped us build a structure for consistent deliverables and focus on where issues may be happening within a project much sooner than we may have otherwise.

This has helped us react during a time in the project when there was still some flexibility as to what types of intervention may make a difference in pulling a project back on track. On top of that, it has helped us find a better and earlier linkage in developing verification tests that can aid in identifying software issues earlier, rather than seeing issues for the first time during formal testing when the overhead of making a change is much more costly.”[23]

Thomas Price, Manager of Software Development, Covidien

Other tests, such as formative usability tests, can also be done at an increment level. Another strong advantage of agile is that these formative usability tests can be run with actual working software rather than prototypes. Feedback from these tests can be incorporated in risk analysis and user experience design activities to further mitigate risks and ensure that the product satisfies end-user needs.

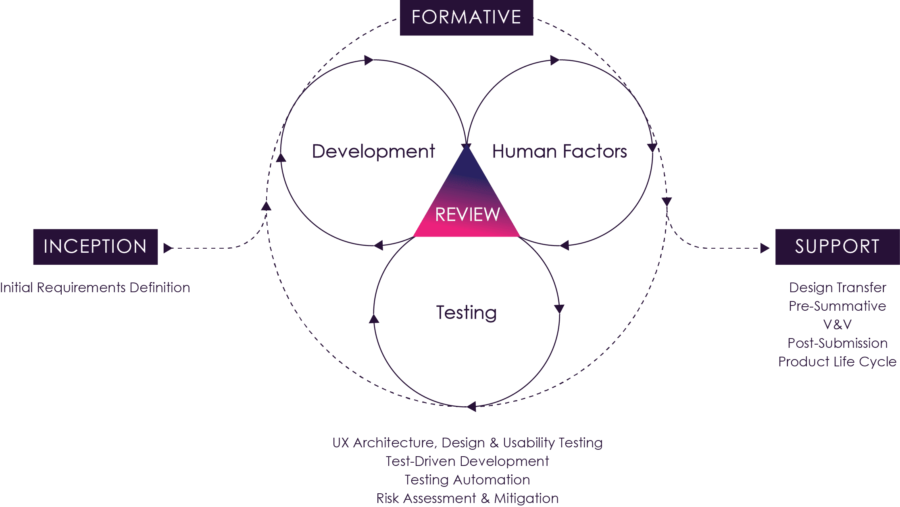

Figure 09: The Agile Process

Figure 9 above illustrates how these activities and feedback loops can be orchestrated. Typically, detailed analysis, design and evaluation activities for a set of stories would be completed before an iteration to maximize development efficiency during the iteration. Iteration level verification testing would be completed one iteration later. In the case of automated verification tests, these could be completed within the iteration.

Agile methodology is designed to get feedback early and often during the course of product design and use this feedback to continuously improve the product. This fast feedback cycle, coupled with agile practices such as test-driven development and continuous integration, allows product organizations to rapidly reduce requirements uncertainty, more closely align to user needs, and reduce risks, defects and wasted effort.

Many discussions of agile focus on the processes and activities of the product development organization and how these processes produce outputs such as user stories, wireframes, acceptance criteria and working software that can be used to get feedback to continuously improve a product.

With all of that emphasis on the development side, it’s easy to miss a key element of agile:

Agile requires rapid feedback from outside the development organization to be effective.

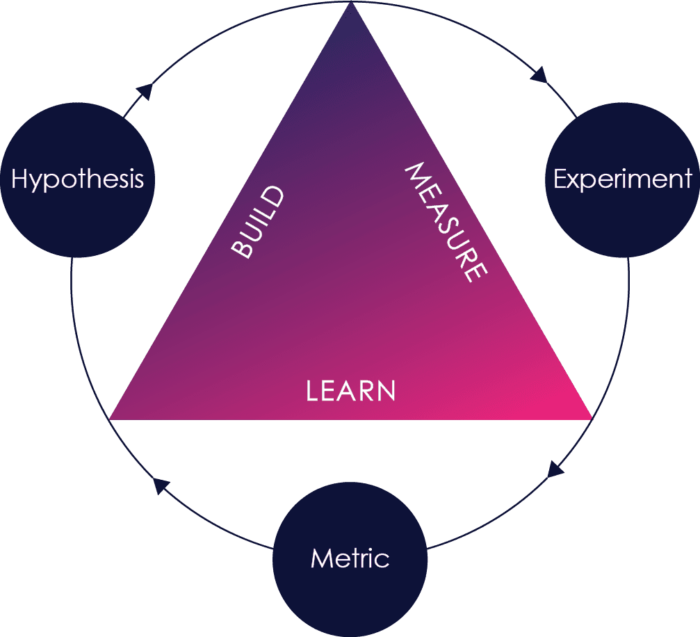

Think of agile as a build-measure-learn loop. When outside feedback happens early and often, defects and missing or incorrect requirements are caught earlier, risks are identified earlier, and it is much cheaper and faster to fix those problems.

When that feedback is missing or happens too infrequently, you have limited measurement and limited learning. Defects and missing or incorrect requirements are not caught, or are caught too late, when it is much more expensive to fix them.

Making sure that the right feedback happens early and often is therefore one of the key challenges in successful agile adoption.

This is easier said than done. In FDA regulated environments, much of the feedback necessary comes from clinicians, marketers, customers and end-users, as well as risk management, human factors engineers and quality management, and the processes are not in place to enable fast feedback from these sources.

In our experience, there are a number of changes that can be implemented to enable faster feedback from outside the development organization:

One of the most important keys to getting the most out of agile methodology is to ensure fast feedback from outside of the development team.

One way to do this is to build cross-functional teams – bringing some functions that are outside the development team into the team so that it has all of the necessary skills to move a user story to completion.

In an FDA regulated environment, that includes not only developers, project managers and business analysts, but also human factors engineers, risk managers and quality assurance. This is a change from Waterfall, where these resources are typically engaged at the beginning and/or end of the project. In that situation, their knowledge of the project is limited, their ability to influence the project is limited, and when change inevitably occurs, they only have limited input. This often results in lower quality or in rework late in the project, when change is most costly.

Bringing these resources onto the team provides them more direct knowledge, and enables the application of risk management and user experience design skills earlier in the process. Here’s how each of them interacts during development:

In our experience, this approach pays big dividends in terms of productivity, clarity of requirements, solution quality and project timelines.

There’s another part of fast feedback that needs to be addressed – users.

There are many excuses for not getting feedback from users and customers:

In our experience, the value of getting feedback from users far outweighs the risks and inconvenience.

As Figure 3 earlier in this book points out, 45% of software features that are built don’t get used, and 16% get used very rarely, either because they’re not that important to the users, or because of inadequate design. At the same time, important features are often missed, or discovered late in testing.

To put it another way: you never get it right the first time. Frequent feedback from users lets you iteratively improve the product, starting with prototypes and moving through development, preventing wasted effort on unnecessary features and making the features you do build usable and effective (and reducing human factors risks in the bargain).

In order to make it happen, getting user feedback needs to be planned for and made part of the process. We recommend borrowing the concept of “Three User Thursdays” from Lean User Experience. In this model, every Thursday you bring in three users to talk to. You have a backlog of things to work with them on, from prioritization of features to task flows to testing alternative designs. There’s a role on the team for recruiting users and making sure they’re available for testing. Most of the testing can be informal (though documented), with formal testing at less frequent intervals.

If you plan for and execute this process, it can become quite efficient and more than makes up for the cost in reduced waste and product improvement.

While change, new discovery and learning are inevitable during any product development process, and agile processes reduce the cost and risk of change, there is a significant cost associated with design controls for development, whether you are using agile methods or not.

In a typical product development cycle, a high-level product concept or set of new features is given to an R&D organization, which defines detailed requirements and specifications before development begins.

There is still a lot of risk during this stage*:

* In this discussion, I use risk more broadly to include not just patient risk, but all risks to product effectiveness.

If you generate requirements and specifications without discovering and mitigating these risks, you will probably be building on invalid assumptions and either make major changes during development or compromise on product features and quality in order to make deadlines.

An agile/lean approach during this phase would spell out hypotheses and use fast feedback loops (“build-measure-learn loops”) to validate or invalidate those hypotheses, thereby dramatically reducing risk much more quickly and cost-effectively than during full development. In agile, experiments that involve building something in order to reduce uncertainty are called spikes. Two types of spikes are typically employed:

Just as with prototyping, technical spikes typically involve building just enough to validate or invalidate hypotheses, rather than building something that can be re-used and incorporated in the development process. For high-risk areas, this saves significant amounts of time and money by avoiding costly rework under full design controls.

Just as agile development benefits tremendously from having cross-functional teams participating throughout the process, so do research and development efforts involving functional and technical spikes. Risk analysis should be done at an early stage, and functional and technical spikes can be used to discover and mitigate patient risk – the results can be used to iteratively build the risk file and improve risk mitigation. Similarly, technical and architectural feedback is important during the prototyping process – without it, you are likely to end up with designs that are more costly and time consuming to implement.

Feedback helps you make your product better. The more good feedback you get, and the faster you get it, the faster you can incorporate that feedback to improve your product. The best feedback you can get is not from ideal devices used under ideal conditions, but rather from real use in real conditions. In other words, postmarket feedback.

Getting actual usage data and feedback quickly and reliably is important in any industry. But it’s becoming ever more important in medical devices and diagnostics:

Given all of these issues, the current state of the art for getting feedback in the medical device industry is not very active, real-time, or accurate.

Much post-market feedback for medical devices is still gathered the old-fashioned way: users call the manufacturer to register complaints, and each call is handled by a call center where someone with limited expertise types in the complaint to the best of their ability. Eventually, product support tries to interpret these notes to see if an investigation is needed. If an investigation is initiated, it can be three weeks after the incident by the time the team starts getting feedback, by which time the incident is far less fresh in the minds of everyone involved.

This process is haphazard and based on very limited information. It’s a bit like the game of telephone. If you’ve seen the notes on these conversations, sometimes it’s a wonder anyone can make heads or tails of them, let alone make a determination on whether to deploy an investigative team or not. It is even more difficult to try to take this data and draw inferences on actual risk frequency and severity or to use that for future product improvement. As most support engineers will tell you, the most frequent thing that happens when devices are sent back and investigated after a complaint is “no problem found.”

The FDA has taken note of these issues and is working to improve its own postmarket surveillance. Its vision is “the creation of a national system that conducts active surveillance in near real-time using routinely collected electronic health information containing unique device identifiers, quickly identifies poorly performing devices, accurately characterizes the real-world clinical benefits and risks of market devices, and facilitates the development of new devices and new uses of existing devices through evidence generation, synthesis, and appraisal.” Its recent release of a mobile app for reporting adverse events, pilot programs like ASTER-D, and timelines for implementation of Unique Device Identifiers (UDIs) are steps along the way to that vision.

Given all of these issues, shouldn’t you have a better feedback mechanism for your own products than the FDA?

The good news is that with today’s always on, always connected technology, getting better feedback is very achievable.

The first element to getting better feedback is anonymized person-based analytics. Aggregate analytics packages like Google Analytics track frequency of actions across all instances of an application, rather than the paths that actual users take through your software. Anonymized person-based analytics tracks these actual paths and aggregates behavior by person, allowing you to much more easily see actual behavior trends and discover potential usability and/or safety issues.
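To make the contrast concrete, here is a minimal Python sketch. The event format, the action names, and the salted hash are illustrative assumptions, not a description of any particular analytics product. Note also that a salted hash is strictly pseudonymization; a real system would need a reviewed de-identification approach.

```python
import hashlib
from collections import Counter, defaultdict


def anonymize(user_id: str, salt: str) -> str:
    """Replace a real identifier with a salted one-way hash (a pseudonym)."""
    return hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()[:12]


def aggregate_counts(events):
    """Aggregate view: how often each action occurred, across everyone."""
    return Counter(action for _, _, action in events)


def paths_by_person(events, salt):
    """Person-based view: one time-ordered list of actions per pseudonym."""
    paths = defaultdict(list)
    for user_id, _timestamp, action in sorted(events, key=lambda e: e[1]):
        paths[anonymize(user_id, salt)].append(action)
    return dict(paths)


# Hypothetical events: (user_id, timestamp, action)
events = [
    ("alice", 1, "open_app"),
    ("bob", 2, "open_app"),
    ("alice", 3, "enter_dose"),
    ("bob", 4, "enter_dose"),
    ("alice", 5, "confirm_dose"),
    ("bob", 6, "cancel"),
    ("bob", 7, "enter_dose"),
    ("bob", 8, "cancel"),
]

counts = aggregate_counts(events)
paths = paths_by_person(events, salt="example-salt")
```

In this sample, the aggregate counts show three dose entries, one confirmation, and two cancellations. Only the per-person paths reveal that both cancellations came from the same person, who tried twice and gave up both times, which is the kind of pattern that may point to a usability or safety issue.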

Anonymized person-based analytics can also very quickly help you find out if there are issues linked to third party software updates, hardware updates, networking, or OS upgrades.

I know of a number of instances where applications were not working for a month because of issues arising out of operating system upgrades before the manufacturers became aware that they had a problem, another month before they figured out what the problem was, another month before they had fixed it, and two more before the software was released to users.

If the manufacturer had implemented anonymized person-based analytics, they would have known there was an issue immediately and would have been able to diagnose it much more quickly.
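As a rough illustration of how such an issue could surface, the sketch below groups session outcomes by operating system version and flags versions with an unusually high error rate. The session format, the version labels, and the threshold values are all assumptions chosen for the example.

```python
from collections import defaultdict


def error_rate_by_os(sessions):
    """sessions: iterable of (os_version, had_error).

    Returns a dict mapping os_version -> (error_rate, session_count).
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for os_version, had_error in sessions:
        totals[os_version] += 1
        if had_error:
            failures[os_version] += 1
    return {v: (failures[v] / totals[v], totals[v]) for v in totals}


def flag_versions(sessions, threshold=0.2, min_sessions=5):
    """Return OS versions with enough sessions and an error rate above threshold."""
    rates = error_rate_by_os(sessions)
    return sorted(
        version
        for version, (rate, count) in rates.items()
        if count >= min_sessions and rate > threshold
    )


# Hypothetical data: 1 of 10 sessions fails on the old OS, 6 of 10 on the new one.
sessions = (
    [("os-17.0", False)] * 9 + [("os-17.0", True)] * 1
    + [("os-17.1", False)] * 4 + [("os-17.1", True)] * 6
)

flagged = flag_versions(sessions)
```

With this sample data, only the newer OS version is flagged. The minimum session count is there so that a single failed session on a rarely used version does not raise an alarm.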

Anonymized person-based analytics are also key to product improvements, especially if you are speeding up product release cycles. They let you know what’s happening – what’s being used, what’s not, and where the drop-offs are. You can then use Three User Thursdays to help you understand why users are behaving the way they are and get feedback on software improvements to address design shortcomings. Built-in cohort analysis can then help you measure the effects of changes on usage patterns.
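A simple form of cohort analysis can be sketched as follows, assuming each person's path has already been collected and that people are grouped into cohorts by the software release they use. The funnel steps and release labels are hypothetical.

```python
# Hypothetical task flow whose drop-offs we want to measure.
FUNNEL = ["open_app", "enter_dose", "confirm_dose"]


def funnel_reach(path, funnel=FUNNEL):
    """Return how many funnel steps a path completed, in order."""
    step = 0
    for action in path:
        if step < len(funnel) and action == funnel[step]:
            step += 1
    return step


def funnel_by_cohort(user_paths, funnel=FUNNEL):
    """user_paths: iterable of (cohort, path).

    Returns cohort -> list with the fraction of people reaching each step.
    """
    reached = {}
    sizes = {}
    for cohort, path in user_paths:
        counts = reached.setdefault(cohort, [0] * len(funnel))
        sizes[cohort] = sizes.get(cohort, 0) + 1
        for i in range(funnel_reach(path, funnel)):
            counts[i] += 1
    return {c: [n / sizes[c] for n in reached[c]] for c in reached}


complete = ["open_app", "enter_dose", "confirm_dose"]
user_paths = (
    [("v1.0", complete)] * 2
    + [("v1.0", ["open_app", "enter_dose"])] * 2
    + [("v1.1", complete)] * 3
    + [("v1.1", ["open_app"])] * 1
)

result = funnel_by_cohort(user_paths)
```

In this made-up data, half of the v1.0 cohort confirms a dose compared with three quarters of the v1.1 cohort, while v1.1 shows a new drop-off before dose entry. The numbers tell you what changed; sessions with real users are still needed to find out why.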

The second element to better postmarket feedback is built-in diagnostics based on hazard analysis.

Hazard Analysis is one of the major elements of an FDA regulated product development effort and a critical component of FDA submissions. This documentation gets built and modified throughout the product life cycle, through risk analysis, risk evaluation, risk control, evaluation of residual risk, risk management reporting and post-production information collection. It provides an identification of the potential risks, their frequency and severity and what is considered an acceptable risk, as well as risk control measures undertaken and residual risk.

This is structured information that can be used as the basis of diagnostic questionnaires. These diagnostic questionnaires can be made available through the medical device software itself, through related mobile and web applications, and can even be made available to call center staff when users call in. When coupled with snapshots of the state of the system and user behavior (from person-based analytics), they allow real-time or near-real-time diagnostics of issues as they occur, providing much richer inputs for risk monitoring and CAPA processes.
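One way to picture this is the sketch below, in which each hazard from the hazard analysis carries the symptoms a user might report and the questions that help diagnose it. The hazard entries, the severity scale, and the field names are invented for illustration and are not taken from any real risk file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hazard:
    hazard_id: str
    description: str
    severity: int          # assumed scale: 1 (negligible) to 5 (catastrophic)
    symptoms: frozenset    # complaint categories that may indicate this hazard
    questions: tuple       # diagnostic questions to ask when a symptom matches


def build_questionnaire(hazards, reported_symptom):
    """Return (hazard_id, question) pairs for matching hazards, most severe first."""
    matching = sorted(
        (h for h in hazards if reported_symptom in h.symptoms),
        key=lambda h: h.severity,
        reverse=True,
    )
    return [(h.hazard_id, q) for h in matching for q in h.questions]


def build_report(reported_symptom, answers, snapshot):
    """Bundle the answers with a snapshot of system state for later review."""
    return {
        "symptom": reported_symptom,
        "answers": dict(answers),
        "snapshot": dict(snapshot),
    }


# Hypothetical hazard analysis entries.
hazards = [
    Hazard("H-002", "Loss of communication with glucose monitor", 3,
           frozenset({"no_reading", "unexpected_dose"}),
           ("When was the last reading shown?",)),
    Hazard("H-001", "Insulin over-delivery", 5,
           frozenset({"unexpected_dose"}),
           ("What dose was displayed before delivery?",
            "Was a bolus already in progress?")),
    Hazard("H-003", "Battery depletion", 2,
           frozenset({"device_off"}),
           ("When was the device last charged?",)),
]

questionnaire = build_questionnaire(hazards, "unexpected_dose")
report = build_report(
    "unexpected_dose",
    {item: "not answered yet" for item in questionnaire},
    {"app_version": "v1.1", "os_version": "os-17.1"},
)
```

Because every answer is tied back to a hazard identifier, the resulting reports can be counted and compared against the risk file rather than interpreted from free-text notes.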

Because the data is structured and meaningful feedback can occur much more quickly than with a traditional approach, you have much better data to see if your original risk assessment was correct (in terms of both risk identification and frequency and severity), whether your risk controls are adequate, what corrective and preventative actions are required, and the effect of those CAPAs.
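As one possible way to check an original frequency estimate against field data, the sketch below asks how surprising the observed number of incidents would be if the estimate were correct. Modeling incidents as a Poisson process, and the particular rate and cutoff used, are assumptions made for this example rather than a method prescribed by any standard.

```python
import math


def prob_at_least(k, expected):
    """P(X >= k) for X ~ Poisson(expected)."""
    below = sum(
        math.exp(-expected) * expected ** i / math.factorial(i)
        for i in range(k)
    )
    return 1.0 - below


def review_needed(estimated_rate_per_use, uses, observed_incidents, alpha=0.01):
    """True if the observed count is implausibly high under the estimated rate."""
    expected = estimated_rate_per_use * uses
    return prob_at_least(observed_incidents, expected) < alpha


# Hypothetical risk file estimate: 1 incident per 10,000 uses.
one_incident = review_needed(1e-4, 10_000, observed_incidents=1)
five_incidents = review_needed(1e-4, 10_000, observed_incidents=5)
```

With an estimate of one incident per 10,000 uses, seeing one incident in 10,000 uses is unremarkable, while seeing five would suggest that the original frequency estimate, or the risk controls behind it, should be revisited.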

If you combine these two elements, you can dramatically enhance product quality and customer satisfaction and minimize exposure arising from adverse incidents.

Or you could have the FDA do the monitoring for you and just react to what they find…

Agile methodology, when properly adapted to the FDA’s quality systems regulations, can provide superior results to currently prevailing waterfall development methods, especially for complex systems with significant software components.

Agile methodology is designed to get feedback early and often during the course of product design and use this feedback to continuously improve the product. As a result, it is well suited to the development of complex systems with emergent requirements. The feedback loop can be adapted to incorporate risk management, human factors, verification and validation, and documentation of design controls. This fast feedback cycle, coupled with agile practices such as test-driven development and continuous integration, allows product organizations to:

Thus, companies able to adopt agile methodology give themselves a better opportunity to rise to the challenges posed by the rapidly changing regulatory, reimbursement and technology landscape and turn these to competitive advantage and market success.

1. Understanding Barriers to Medical Device Quality, FDA’s Center for Devices and Radiological Health (CDRH), October 2011 p 28. https://www.fda.gov/files/about%20fda/published/Understanding-Barriers-to-Medical-Device-Quality-%28PDF%29.pdf

2. “The majority of companies have increased significantly in every measure related to the use of software in products. Interestingly, the life sciences industry (which includes medical device manufacturing) has increased the ratio of software engineers more than others, and 92% of respondents in that industry say they plan to further increase software use over the next five years”. Tech-Clarity Perspective: Developing Software-Intensive Products: Addressing the Innovation-Complexity Conundrum, 2012, pp. 4-5.

3. Understanding Barriers to Medical Device Quality, FDA’s Center for Devices and Radiological Health (CDRH), October 2011 p 3. https://www.fda.gov/OfficeofMedicalProductsandTobacco/CDRHReports/ucm277272.htm

4. Understanding Barriers to Medical Device Quality, FDA’s Center for Devices and Radiological Health (CDRH), October 2011 p 31. https://www.fda.gov/OfficeofMedicalProductsandTobacco/CDRHReports/ucm277272.htm

5. General Principles of Software Validation; Final Guidance for Industry and FDA Staff, January 2002, section 3.1. https://www.fda.gov/files/medical%20devices/published/General-Principles-of-Software-Validation—Final-Guidance-for-Industry-and-FDA-Staff.pdf

6. 2015 CHAOS Report, the Standish Group.

7. Why Agile Software Development Techniques Work: Improved Feedback, by Scott Ambler. https://www.ambysoft.com/essays/whyAgileWorksFeedback.html

8. 2012 CHAOS Manifesto, the Standish Group.

9. Adopting Agile in an FDA Regulated Environment, Rod Rasmussen, Tim Hughes, J.R. Jenks, John Skach of Abbott Labs, pp.151-155, 2009 Agile Conference, 2009.

10. Agile Development Methods for Space Operations, Jay Trimble and Chris Webster, NASA Ames Research Center.

11. Adopting Agile in the U.S. Department of Defense.

12. GE Becomes More Agile, Rachel King, Wall Street Journal, May 30, 2012.

13. “It is easiest to describe the processes in this standard in a sequence, implying a ‘waterfall’ or ‘once-through’ life cycle model. However, other life cycles can also be used. Some development (model) strategies as defined at ISO/IEC 12207 [9] include (see also Table B.1): … Evolutionary: The ‘evolutionary’ strategy also develops a SYSTEM in builds but differs from the incremental strategy in acknowledging that the user need is not fully understood and all requirements cannot be defined up front. In this strategy, customer needs and SYSTEM requirements are partially defined up front, then are refined in each succeeding build.” IEC 62304 – Medical device software – Software life cycle processes, 2006. Annex B, pp. 75-76.

14. Design Control Guidance for Medical Device Manufacturers, US Food and Drug Administration, p.5

15. AAMI TIR45:2012 Guidance on the use of AGILE practices in the development of medical device software, Association for the Advancement of Medical Instrumentation, August 2012.

16. Recognized Consensus Standards, January 15th, 2013, U.S. Food and Drug Administration – Recognition Number 13-36: AAMI TIR45:2012, Guidance on the use of AGILE practices in the development of medical device software. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfstandards/detail.cfm?standard__identification_no=30575

17. ISO 14971: 2007 Medical devices — Application of risk management to medical devices. https://www.iso.org/standard/38193.html

18. AAMI TIR45:2012 Guidance on the use of AGILE practices in the development of medical device software, Association for the Advancement of Medical Instrumentation, August 2012. Figure 4- Mapping IEC 62304’s activities into Agile’s incremental/evolutionary lifecycle, p. 23.

19. Beyond the Hype: Evaluating and Measuring Agile, Quantitative Software Management, Inc., 2011.

20. FURPS+ is a model for classifying requirements. FURPS is an acronym that stands for Functionality, Usability, Reliability, Performance and Supportability. The + in FURPS+ stands for design constraints, implementation requirements, interface requirements and physical requirements. For a more detailed discussion of FURPS+, see https://www.ibm.com/developerworks/rational/library/4706.html

21. General Principles of Software Validation: Final Guidance for Industry and FDA Staff, January 2002.

22. General Principles of Software Validation: Final Guidance for Industry and FDA Staff, January 2002, p. 1.

Bernhard Kappe is founder and president of Orthogonal, where he applies his expertise in lean startup and agile development to help medical companies launch successful products faster. He has a passion for helping companies deliver more from their innovation pipelines and for launching software that makes a difference in the lives of patients, physicians, and caregivers.

He speaks on agile and product innovation at a number of medical device and health IT conferences, and blogs on medical software development at https://devorthogonal.wpengine.com/insights/

He can be reached via:

Email: bkappe@devorthogonal.wpengine.com

LinkedIn: https://www.linkedin.com/in/bernhardkappe

Twitter: @bernhardkappe

Phone: 312-372-1058 x 6002
