Case Study: Capturing Quality Images for ML Algorithm

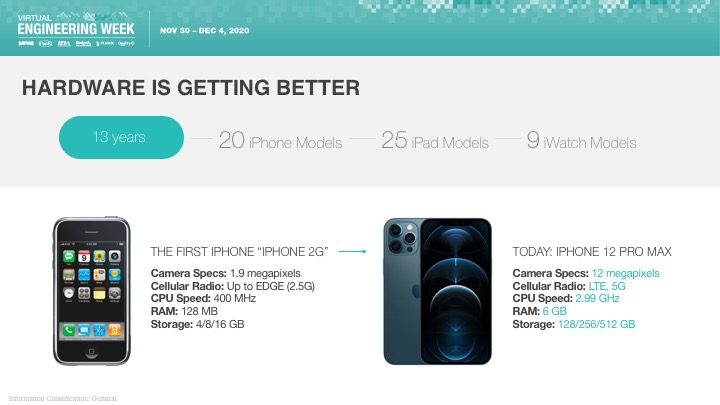

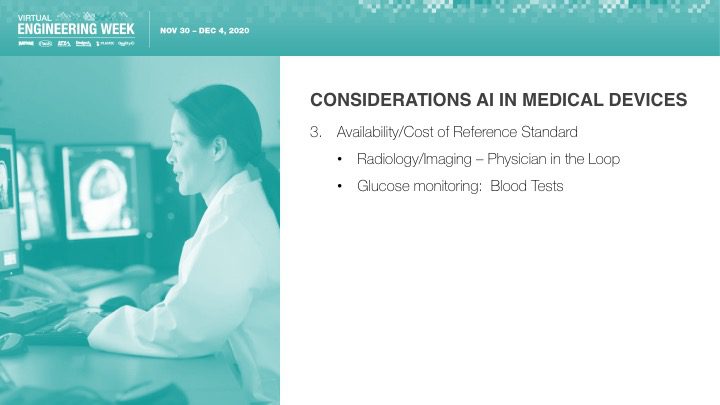

Orthogonal was excited that Bernhard Kappe, our CEO and Founder, spoke at Virtual Engineering Week on Friday, December 4, 2020, on the topic “AI & Machine Learning in Medical Devices: It’s Getting Better All the Time.” The talk was hosted by Informa and its Medical Device + Diagnostic Industry (MD+DI) media property. (Shout-outs to Laurie Lehmann and Naomi Price for setting this up and guiding us through the process.)

If you missed the talk and want to check it out, or you caught it and want more or want to share it, you have a few options:
